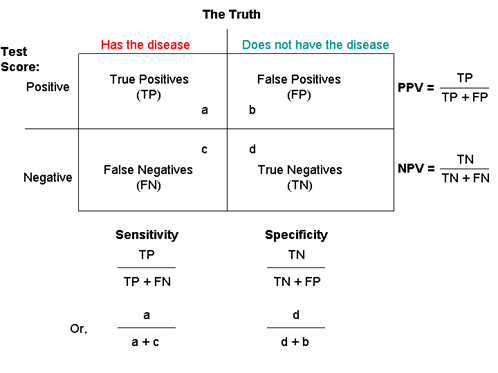

A: Good question! Sensitivity and specificity are characteristics of a medical test that help us determine how useful that test is and how to interpret the result.

BUT, they aren’t the be-all and end-all. We also want to know the positive and negative predictive values. Strap in for the ride and let’s talk stats!

Sensitivity tells us how often the test is positive for someone who actually has a disease (called a true positive). In other words, “If you have the disease when you take the test, what is the chance it comes back positive?” A very sensitive test will correctly identify most people who have the disease and has a low false-negative rate. False negatives are when the test is negative even though that person does have the disease. If we have a test that is 99% sensitive, it will identify 99% of people who have the disease. 1% of people with the illness would be missed and falsely told they did not have the disease.

Specificity tells us how often the test is negative for someone who doesn’t have the disease (called a true negative). In other words, “If you don’t have the disease when you take the test, what is the chance it comes back negative?” A highly specific test will correctly clear almost everyone who doesn’t have the disease and will not produce a bunch of false-positive results. False positives are when the test is positive even though the person doesn’t have the disease. A 99% specific test will correctly identify 99% of people who don’t have a disease and will incorrectly give a positive result to 1% of people who don’t have the disease.

Sensitivity and specificity are helpful when we are thinking about how to interpret a test result. No test is perfect, and all tests will have some false positives and false negatives. False positives and negatives are the bane of testing because we don’t want to miss a disease and we don’t want to tell people they have a disease they don’t actually have. When clinicians are ordering a test, they look at the sensitivity and specificity to help them interpret the result. It’s important to know the odds of a false positive or false negative.

“That’s fun,” you say. “How incredibly useful!” It is, but slow your roll. Sensitivity and specificity are important but not the only numbers that matter. It may seem counterintuitive, but the prevalence of a disease really impacts how you interpret a test result. How do we know the prevalence? We use a “gold standard.” This is a test that gives us the true result no matter what. You may be wondering why we need another test if we have a gold standard. Often, the gold standard is not a test we want to do to people. It can involve things like surgery, autopsy, or other invasive, expensive, and not-so-fun things. So getting back to our stats: we calculate a test’s positive and negative predictive values, which take into account sensitivity and specificity as well as how common a disease state is.

Positive predictive value tells us how likely it is for someone who has a positive result on a test to actually have the disease (“If I have a positive test, what is the chance I have the disease?”). A positive predictive value of 99% means that if you got a positive test result, 99% of the time you truly do have the disease. Negative predictive value tells us how likely it is for someone who has a negative result to not have the disease (“If I have a negative test, what is the chance I don’t have the disease?”). A negative predictive value of 99% means that if you got a negative test result, 99% of the time you don’t have the disease.
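For the formula fans, here is one way to write those four definitions using counts of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The abbreviations are shorthand introduced here for convenience:

```latex
\[
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN} & \text{Specificity} &= \frac{TN}{TN + FP} \\
\text{Positive predictive value} &= \frac{TP}{TP + FP} & \text{Negative predictive value} &= \frac{TN}{TN + FN}
\end{aligned}
\]
```

Notice that sensitivity and specificity divide by the number of people who do or don’t have the disease, while the predictive values divide by the number of people who got a given test result.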

Getting confusing, right? Let’s try an example to clarify.

We have 100 people in a room for a party and some of them are werewolves. We need a really good werewolf test, because we don’t want to miss any werewolves and we certainly don’t want to call someone a werewolf who isn’t. In addition to our new werewolf swab (patent pending), we also need a gold standard. In this case, we’ll wait for the full moon when the wolves will all turn. First we swab everyone, then the moon reveals there are 50 people in the room who are werewolves! So the prevalence in this room is 50%. (Yikes!)

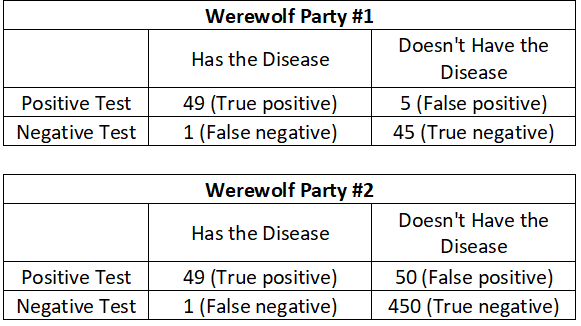

The test identifies 54 people as being werewolves: 49 of those are in fact werewolves (true positives) and 5 aren’t (false positives). The test was negative for 46 people: 1 who is a werewolf (false negative) and 45 who weren’t (true negative). Look at the Werewolf Party #1 table.

The sensitivity of the test is 98%. We calculate this by dividing the number of true positives (49 people who had the disease AND a positive test) by the total number of people who have the disease (50 true positives AND false negatives).

The specificity is 90%. This is calculated by dividing the number of true negatives (45 people who test negative and don’t have the disease) by the total number of true negatives and false positives (50 people who don’t have the disease).

The positive predictive value for this test is 90.7%. This is calculated by dividing the number of true positives (49 people who have the disease and a positive test) by the total number of people who had a positive test (54 true positives AND false positives). This means someone with a positive test result has a 90.7% chance of actually being a werewolf. Not too shabby.

The negative predictive value for this test is 97.8%. This is calculated by dividing the true negatives (45 people who don’t have the disease and have a negative test) by all the people with a negative test (46 true negatives AND false negatives). Someone with a negative test result has a 97.8% chance of not being a werewolf. This test is really good at ruling OUT werewolfery.
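If you want to check the Werewolf Party #1 arithmetic yourself, here is a minimal Python sketch. The function name `diagnostic_metrics` and its argument names are made up for illustration; the four counts are the ones from the party described above.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Return the four test statistics from the counts in a 2x2 table.

    tp: true positives, fp: false positives,
    fn: false negatives, tn: true negatives.
    (Hypothetical helper, named here only for illustration.)
    """
    return {
        "sensitivity": tp / (tp + fn),  # positives among everyone with the disease
        "specificity": tn / (tn + fp),  # negatives among everyone without it
        "positive predictive value": tp / (tp + fp),  # truly sick among positive tests
        "negative predictive value": tn / (tn + fn),  # truly well among negative tests
    }


# Werewolf Party #1: 49 true positives, 5 false positives,
# 1 false negative, 45 true negatives.
party_1 = diagnostic_metrics(tp=49, fp=5, fn=1, tn=45)
for name, value in party_1.items():
    print(f"{name}: {value:.1%}")
```

Running it should print 98.0%, 90.0%, 90.7%, and 97.8%, matching the numbers worked out by hand.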

What if everyone invited a few non-werewolf friends to the gathering and now there are 550 people in the room: the same 50 werewolves plus 500 non-werewolves? How does that change things? Let’s look at the Werewolf Party #2 table.

At this party, the prevalence is much lower, with only about 9% of the partygoers being werewolves (50 people out of 550 are werewolves). But the sensitivity and specificity of the test didn’t change. The sensitivity remains 98% (calculated as 49 true positives divided by 50 people with the disease). The specificity remains the same at 90% (calculated as 450 true negatives divided by 500 people who don’t have the disease). The positive and negative predictive values change significantly though! Now, the positive predictive value is only 49.5% (calculated as 49 true positives divided by all 99 positive test results). That’s way less good: a positive result is now about as informative as flipping a coin. The negative predictive value is now 99.8% (calculated as 450 true negatives divided by all 451 negative test results). As the prevalence decreased, the test became less useful for telling if someone is a werewolf but more useful for ruling it out. This is why knowing how likely a disease is before you get the test (also called the pre-test probability) really matters.
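To see the prevalence effect without throwing another party, here is a rough Python sketch that computes the predictive values straight from sensitivity, specificity, and prevalence. It assumes, as in the werewolf example, that sensitivity and specificity stay fixed no matter how common the disease is. The function name `predictive_values` and the prevalence values in the loop are illustrative choices, not figures from the tables.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test used in a population with the given prevalence.

    All three inputs are fractions between 0 and 1.
    (Hypothetical helper, named here only for illustration.)
    """
    # Expected share of the whole population in each cell of the 2x2 table.
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)

    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Same werewolf swab (98% sensitive, 90% specific) at parties with
# fewer and fewer werewolves. The prevalences are made-up examples.
for prevalence in (0.50, 0.05, 0.01):
    ppv, npv = predictive_values(0.98, 0.90, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

At 50% prevalence this reproduces the Party #1 values (90.7% and 97.8%). As the prevalence drops, the positive predictive value falls while the negative predictive value creeps toward 100%.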

Stay safe. Stay well. Enjoy stats. Avoid werewolves.

Those Nerdy Girls

Want more fun with this? Check out these links:

Understanding medical tests: sensitivity, specificity, and positive predictive value [archived link]